A Deep Dive into the Heart of Transformers

In the realm of deep learning, the self-attention mechanism has emerged as a transformative concept, paving the way for advanced architectures such as the Transformer. Popularized by the 2017 paper “Attention Is All You Need” by Vaswani et al., which built the Transformer entirely around attention, self-attention has since become a fundamental building block in natural language processing, computer vision, and many other domains. This article explains how the self-attention mechanism works, where it is applied, and how it improves model performance.

The Essence of Self-Attention:

1. Motivation:

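- Idea: The self-attention mechanism addresses a key limitation of traditional sequential models such as recurrent neural networks (RNNs). Because these models process a sequence one element at a time, information from distant elements must pass through many intermediate steps, which makes long-range dependencies hard to capture.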

- Benefit: By allowing each element in a sequence to attend to all other elements, the self-attention mechanism enables models to weigh the importance of different parts of the input when processing each element.

2. Key Components:

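- Attention Scores: For each element in the sequence, a score is computed against every element in the sequence, including itself, measuring how relevant each one is to it (in the Transformer, a scaled dot product of learned query and key vectors). These scores determine how much attention each element pays to the others.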

- Softmax Function: The softmax function is applied to the attention scores, converting them into a probability distribution. This ensures that the weights assigned to different elements sum to 1.

- Weighted Sum: The elements (in the Transformer, their value vectors) are then multiplied by their respective attention weights, i.e. the softmax outputs, and summed. The resulting weighted sum is a new, context-aware representation of each element.
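The three components above can be sketched in a few lines of Python. This is a minimal, simplified sketch: it assumes the raw vectors serve directly as queries, keys, and values (no learned projections and no scaling), and the helper names `softmax`, `dot`, and `simple_self_attention` are illustrative, not taken from any library.

```python
import math

def softmax(scores):
    """Convert raw scores into non-negative weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def simple_self_attention(xs):
    """Toy self-attention: each raw vector acts as its own query, key, and value."""
    outputs, all_weights = [], []
    for xi in xs:
        # 1. Attention scores: compare xi with every element, including itself.
        scores = [dot(xi, xj) for xj in xs]
        # 2. Softmax: turn the scores into weights that sum to 1.
        weights = softmax(scores)
        # 3. Weighted sum: mix all elements according to the weights.
        context = [sum(w * xj[k] for w, xj in zip(weights, xs)) for k in range(len(xi))]
        outputs.append(context)
        all_weights.append(weights)
    return outputs, all_weights

xs = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = simple_self_attention(xs)
```

For the first element, [1, 0], the scores against the three elements are 1, 0, and 1, so it splits most of its weight equally between itself and [1, 1].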

Understanding the Mechanism:

1. Mathematical Representation:

- Input Sequence: Suppose we have an input sequence (x1, x2, …, xn), where each xi is an element of the sequence represented as a vector (for example, a token embedding).

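- Attention Scores: The attention scores are computed with learnable parameters. In the Transformer, three learned matrices, WQ, WK, and WV, project each xi into a query vector qi, a key vector ki, and a value vector vi. The score between xi and xj is the dot product of qi and kj divided by √dk, where dk is the key dimension, and a softmax over j turns the scores for element xi into attention weights a1i, a2i, …, ani that sum to 1.

- Weighted Sum: The output yi for element xi is the weighted sum of the value vectors of all elements, where the weight on element xj is aji: yi = a1i v1 + a2i v2 + … + ani vn.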
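In equation form, the computation is written below, assuming the scaled dot-product formulation of Vaswani et al. Here WQ, WK, and WV are the learnable projection matrices, dk is the dimension of the key vectors, and aji follows the index convention of the text: it is the weight that element xi places on element xj.

```latex
\begin{aligned}
q_i &= W_Q x_i, \qquad k_j = W_K x_j, \qquad v_j = W_V x_j \\
a_{ji} &= \frac{\exp\left(q_i^\top k_j / \sqrt{d_k}\right)}{\sum_{m=1}^{n} \exp\left(q_i^\top k_m / \sqrt{d_k}\right)} \\
y_i &= \sum_{j=1}^{n} a_{ji} \, v_j \\
Y &= \operatorname{softmax}\left(\frac{Q K^\top}{\sqrt{d_k}}\right) V
\end{aligned}
```

In the last line, the rows of Q, K, V, and Y are the vectors qi, kj, vj, and yi, and the softmax is taken row by row, so entry (i, j) of the softmax matrix is aji.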

2. Parallelization:

- Benefit: Self-attention parallelizes well. The output for each element depends only on the inputs, not on the outputs computed for other elements, so all positions can be processed at once as a few matrix multiplications. A recurrent model, by contrast, must step through the sequence in order.
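The sketch below illustrates this independence with scaled dot-product self-attention in plain Python. It computes every position at once in `self_attention`, then recomputes each position separately, in reverse order, with `attend_one` and gets the same rows, because no position's output feeds into another's. The weight matrices are arbitrary illustrative values rather than trained parameters, and all function names are hypothetical; in practice a tensor library runs these matrix products on parallel hardware.

```python
import math

def matmul(A, B):
    """Multiply matrices stored as lists of rows: (n x k) @ (k x m) -> (n x m)."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

def transpose(A):
    return [list(col) for col in zip(*A)]

def softmax_rows(S):
    """Row-wise softmax, subtracting each row's max for numerical stability."""
    out = []
    for row in S:
        m = max(row)
        exps = [math.exp(s - m) for s in row]
        total = sum(exps)
        out.append([e / total for e in exps])
    return out

def self_attention(X, W_q, W_k, W_v):
    """All positions at once: softmax(Q K^T / sqrt(d_k)) V."""
    Q, K, V = matmul(X, W_q), matmul(X, W_k), matmul(X, W_v)
    d_k = len(W_k[0])
    scores = [[s / math.sqrt(d_k) for s in row] for row in matmul(Q, transpose(K))]
    return matmul(softmax_rows(scores), V)

def attend_one(X, W_q, W_k, W_v, i):
    """Output for position i alone; it reads the inputs X, never other outputs."""
    q = matmul([X[i]], W_q)  # 1 x d_k query for position i
    K, V = matmul(X, W_k), matmul(X, W_v)
    d_k = len(W_k[0])
    scores = [[s / math.sqrt(d_k) for s in row] for row in matmul(q, transpose(K))]
    return matmul(softmax_rows(scores), V)[0]

# Toy data: 4 positions with 3 features each, projected to d_k = 2.
X = [[1.0, 0.0, 1.0], [0.0, 2.0, 0.0], [1.0, 1.0, 1.0], [0.5, -1.0, 0.0]]
W_q = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
W_k = [[0.5, 0.0], [0.0, 0.5], [1.0, 0.0]]
W_v = [[1.0, 2.0], [0.0, 1.0], [1.0, 0.0]]

batched = self_attention(X, W_q, W_k, W_v)
# Computing positions one by one, in any order, reproduces the batched rows.
separate = {i: attend_one(X, W_q, W_k, W_v, i) for i in reversed(range(len(X)))}
```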

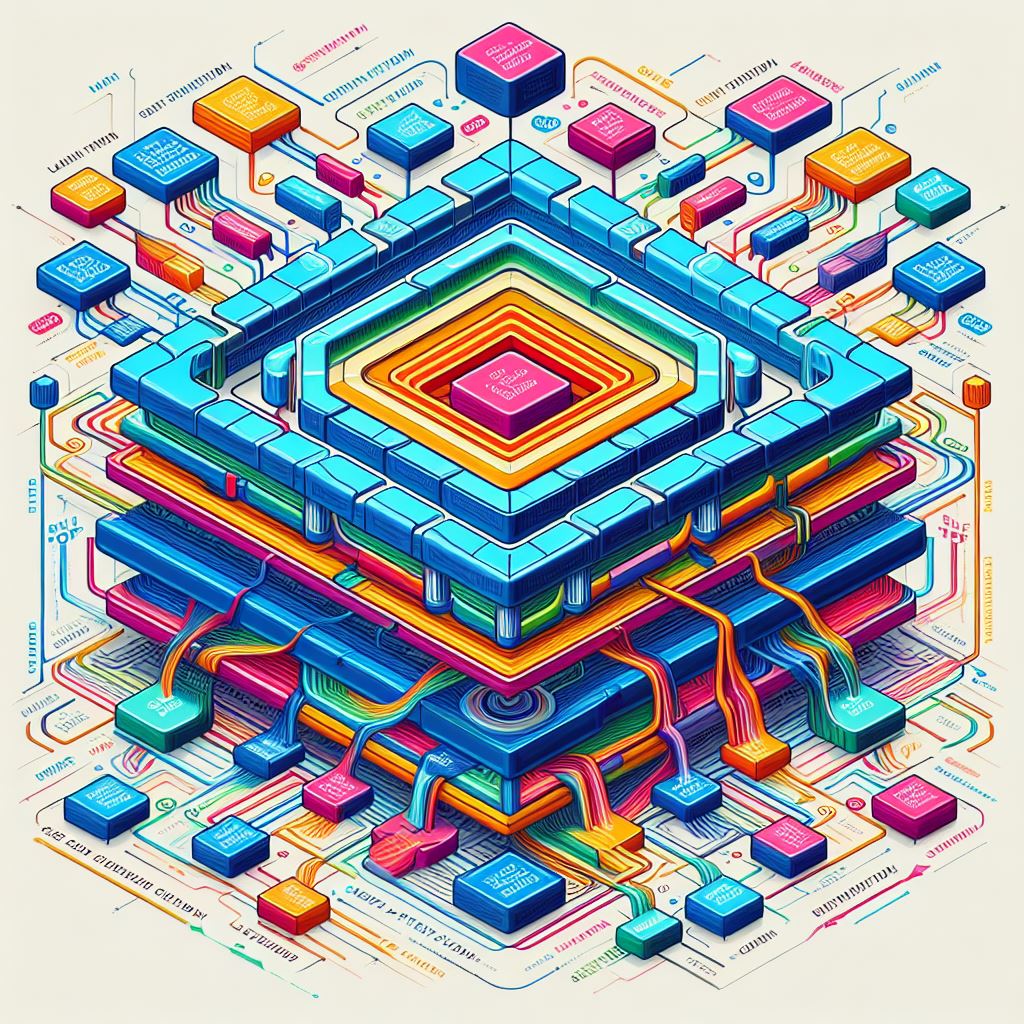

Multi-Head Attention:

1. Motivation:

- Idea: To capture diverse patterns and relationships within the data, the concept of multi-head attention is introduced.

- Benefit: Multiple attention heads operate in parallel, and each can learn to focus on different aspects of the input sequence, such as different kinds of relationships between elements. This broadens the range of features the model can capture.

2. Architecture:

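- Parallel Heads: In multi-head attention, the input sequence is processed by several attention heads in parallel. Each head has its own learned query, key, and value projections, typically of dimension dmodel/h for h heads, and therefore computes its own attention weights.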

- Concatenation: The outputs from different attention heads are concatenated and linearly transformed to produce the final output.
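A minimal multi-head sketch in plain Python, assuming h = 2 heads over dmodel = 4 with a per-head dimension of dmodel/h = 2. Each head has its own query, key, and value projections; the head outputs are concatenated position by position and passed through an output projection `W_o`. Random weights from a fixed seed stand in for trained parameters, and every name here is illustrative.

```python
import math
import random

def matmul(A, B):
    """Multiply matrices stored as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

def softmax_rows(S):
    out = []
    for row in S:
        m = max(row)  # subtract the max for numerical stability
        exps = [math.exp(s - m) for s in row]
        total = sum(exps)
        out.append([e / total for e in exps])
    return out

def attention(X, W_q, W_k, W_v):
    """One head of scaled dot-product self-attention."""
    Q, K, V = matmul(X, W_q), matmul(X, W_k), matmul(X, W_v)
    d_k = len(W_k[0])
    K_t = [list(col) for col in zip(*K)]
    scores = [[s / math.sqrt(d_k) for s in row] for row in matmul(Q, K_t)]
    return matmul(softmax_rows(scores), V)

def random_matrix(rows, cols, rng):
    return [[rng.uniform(-1.0, 1.0) for _ in range(cols)] for _ in range(rows)]

def multi_head_attention(X, heads, W_o):
    """heads: list of (W_q, W_k, W_v) triples, one per head; W_o: output projection."""
    head_outputs = [attention(X, *w) for w in heads]  # each is n x d_k
    # Concatenate the heads' outputs position by position: n x (h * d_k).
    concat = [sum((out[i] for out in head_outputs), []) for i in range(len(X))]
    return matmul(concat, W_o)  # final linear transform back to d_model

d_model, n_heads = 4, 2
d_k = d_model // n_heads
rng = random.Random(0)
heads = [tuple(random_matrix(d_model, d_k, rng) for _ in range(3)) for _ in range(n_heads)]
W_o = random_matrix(n_heads * d_k, d_model, rng)
X = random_matrix(5, d_model, rng)  # 5 positions, each a d_model-dimensional vector
Y = multi_head_attention(X, heads, W_o)
```

Splitting dmodel across heads keeps the total cost close to that of a single full-width head while letting each head compute its own attention pattern.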

Applications in Transformers:

1. Natural Language Processing (NLP):

- Tasks: In tasks such as language translation and sentiment analysis, the self-attention mechanism in Transformers enables the model to capture contextual relationships between words in a sentence.

- Benefit: The ability to consider long-range dependencies makes Transformers highly effective in understanding and generating human language.

2. Computer Vision:

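- Tasks: In computer vision, Vision Transformers (ViTs) apply self-attention to images by splitting each image into fixed-size patches and treating each patch as a token. Every region of the image can then attend to every other region, which lets the model capture contextual dependencies across the whole image.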

- Benefit: Transformer-based vision models built on self-attention have achieved state-of-the-art results on benchmarks for image classification, object detection, and segmentation.
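The patch-splitting step can be sketched as follows. This is a simplified, hypothetical `patchify` for a single-channel image stored as a list of rows; a full ViT would also flatten the color channels, project each patch vector with a learned linear layer, and add position embeddings before the self-attention layers.

```python
def patchify(image, patch):
    """Split a 2-D grayscale image (list of rows) into flattened patch x patch tokens.

    Assumes height and width are both divisible by `patch`.
    Patches are returned in row-major order (left to right, top to bottom).
    """
    height, width = len(image), len(image[0])
    if height % patch or width % patch:
        raise ValueError("image dimensions must be divisible by the patch size")
    tokens = []
    for top in range(0, height, patch):
        for left in range(0, width, patch):
            # Flatten the patch row by row into one vector.
            tokens.append([image[r][c]
                           for r in range(top, top + patch)
                           for c in range(left, left + patch)])
    return tokens

image = [[4 * r + c for c in range(4)] for r in range(4)]  # 4x4 toy "image"
tokens = patchify(image, 2)
# → [[0, 1, 4, 5], [2, 3, 6, 7], [8, 9, 12, 13], [10, 11, 14, 15]]
```

With a 224 × 224 image and 16 × 16 patches, the setting of the ViT-B/16 model in the original ViT paper, this produces 14 × 14 = 196 tokens, and self-attention then relates all 196 patches to one another.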

Challenges and Future Directions:

1. Computational Complexity:

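- Challenge: Because every element attends to every other element, self-attention computes n × n scores for a sequence of length n, so its time and memory cost grow quadratically with sequence length. This becomes expensive for long sequences and large models.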

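- Future Direction: Ongoing research develops more efficient variants of attention, such as sparse attention patterns, low-rank or linear approximations, and memory-efficient implementations that avoid storing the full score matrix.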
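A quick back-of-the-envelope calculation shows why the quadratic term matters. This sketch assumes the full n × n score matrix is stored in 32-bit floats (4 bytes per score) for a single head in a single layer; the helper name is hypothetical.

```python
def score_matrix_bytes(seq_len, n_heads=1, bytes_per_score=4):
    """Memory for one layer's attention scores: one value per (query, key) pair per head."""
    return seq_len * seq_len * n_heads * bytes_per_score

for n in (512, 1024, 2048, 4096):
    mib = score_matrix_bytes(n) / 2**20
    print(f"n = {n}: {n * n:,} scores, {mib:.0f} MiB per head")
```

Each doubling of n quadruples the memory, from 1 MiB at n = 512 to 64 MiB at n = 4096, and during training this can be multiplied further by the number of heads, layers, and sequences in a batch.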

2. Interpretable Representations:

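- Challenge: Attention weights record how much each element draws on every other element, but a model with many layers and heads produces many such weight matrices, and a high weight does not necessarily mean that an element was decisive for the prediction.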

- Future Direction: Researchers are exploring methods to enhance the interpretability of attention mechanisms, making them more transparent and understandable.
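A common starting point is simply to read the attention-weight matrix and see which element each position attends to most. The sketch below does this for three tokens with made-up two-dimensional embeddings, using scaled dot-product scores without learned projections; the embeddings and function names are hypothetical, chosen only to make the table easy to read.

```python
import math

def attention_weights(vectors):
    """Row i holds the softmax of scaled dot-product scores of element i against all elements."""
    d = len(vectors[0])
    rows = []
    for q in vectors:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in vectors]
        m = max(scores)  # subtract the max for numerical stability
        exps = [math.exp(s - m) for s in scores]
        total = sum(exps)
        rows.append([e / total for e in exps])
    return rows

# Hypothetical toy embeddings; a real model would use learned ones.
tokens = ["the", "cat", "sat"]
embeddings = {"the": [0.1, 0.0], "cat": [1.0, 0.5], "sat": [0.8, 1.0]}

weights = attention_weights([embeddings[t] for t in tokens])
for token, row in zip(tokens, weights):
    print(token, " ".join(f"{w:.2f}" for w in row))

# For each token, the token that receives its largest weight.
top = {t: tokens[max(range(len(row)), key=row.__getitem__)] for t, row in zip(tokens, weights)}
```

With these toy vectors, "the" attends most to "cat", while "cat" and "sat" both attend most to "sat"; in a real model the same kind of table exists for every head in every layer.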

Conclusion:

The self-attention mechanism is a foundational concept that has reshaped the field of deep learning. Its ability to capture contextual relationships and long-range dependencies has propelled models like Transformers to the forefront of many applications. As research continues, self-attention remains a focal point for innovation in model efficiency, interpretability, and adaptability, and understanding how it works is key to making the most of modern AI models.